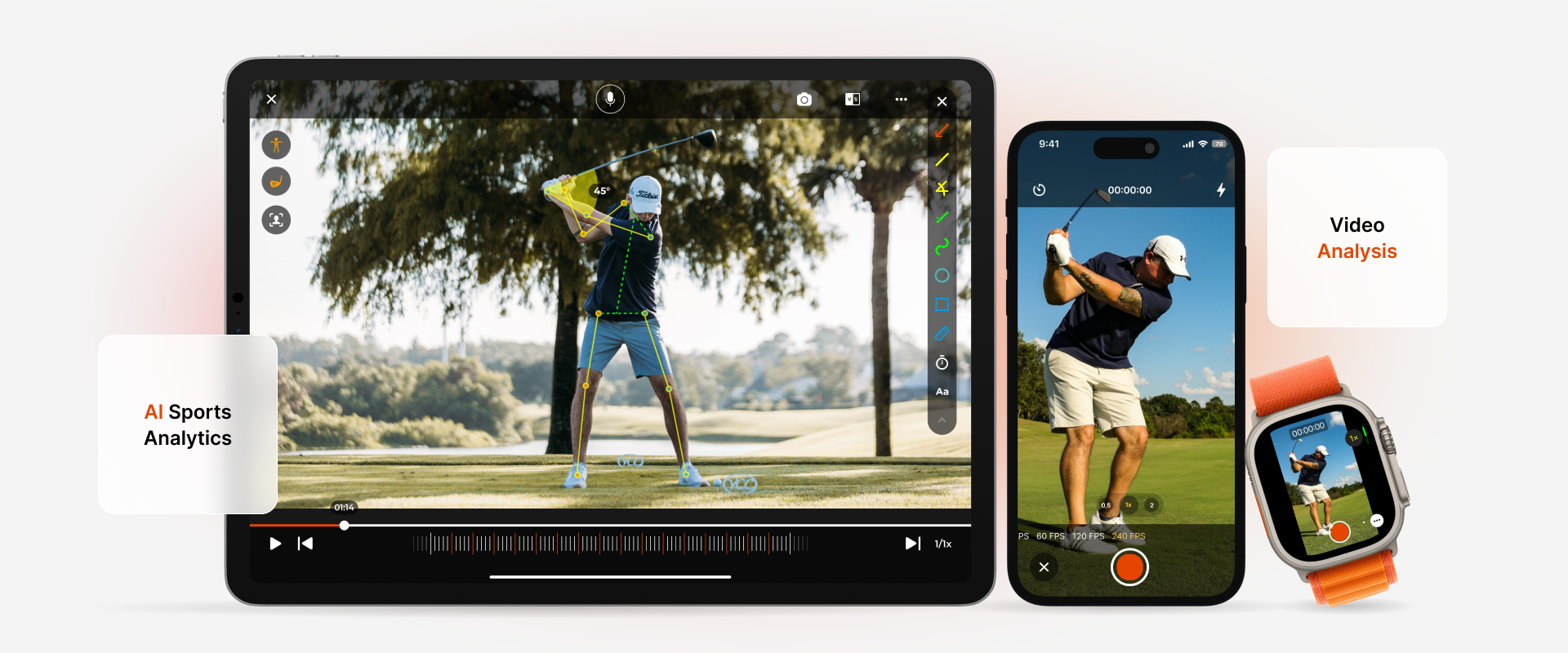

Smartphones have turned everyone into a casual videographer, but raw footage is only half the story. A new generation of video analysis apps is closing the gap between recording and understanding, giving athletes, coaches, educators, and content creators the kind of frame-by-frame insight that used to require a broadcast studio. By combining on-device AI, cloud-based acceleration, and intuitive gesture controls, these apps distill hours of video into actionable data within seconds, with no cables, no desktops, and no PhD in computer vision.

At the heart of the experience is automatic scene detection. The moment you hit “import,” convolutional neural networks scan for scene changes, object motion, and audio spikes, instantly cutting the clip into logical chapters. A youth-soccer coach can see every goal attempt isolated in under five seconds; a dance teacher can jump straight to every pirouette without scrubbing the timeline. The algorithm is optimized for mobile ARM chips, so even a three-year-old iPhone can process 4K footage in real time while the device stays cool.
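To make the idea of cutting a clip into chapters concrete, here is a minimal sketch of scene-cut detection. It deliberately swaps the neural network for a much simpler classical technique, comparing intensity histograms of consecutive frames, and it assumes frames arrive as flat lists of grayscale values. The function names, the bin count, and the threshold are illustrative assumptions, not anything the apps are known to use.

```python
from typing import List, Sequence


def histogram(frame: Sequence[int], bins: int = 16) -> List[float]:
    """Normalized intensity histogram of a grayscale frame (values 0-255)."""
    counts = [0] * bins
    for px in frame:
        counts[min(px * bins // 256, bins - 1)] += 1
    total = len(frame)
    return [c / total for c in counts]


def detect_cuts(frames: Sequence[Sequence[int]], threshold: float = 0.5) -> List[int]:
    """Return every index i at which frame i starts a new scene.

    Assumption: a cut is declared when the L1 distance between the
    histograms of neighboring frames exceeds `threshold` (hypothetical value).
    """
    if not frames:
        return []
    cuts = []
    prev = histogram(frames[0])
    for i in range(1, len(frames)):
        cur = histogram(frames[i])
        # L1 distance is 0 for identical histograms and 2 for disjoint ones.
        dist = sum(abs(a - b) for a, b in zip(prev, cur))
        if dist > threshold:
            cuts.append(i)
        prev = cur
    return cuts


# Toy clip: three dark frames, two bright frames, one dark frame.
dark = [10] * 64
bright = [240] * 64
clip = [dark, dark, dark, bright, bright, dark]
print(detect_cuts(clip))  # [3, 5]
```

A production detector would also weigh motion and audio cues, as described above; the histogram test only catches abrupt visual changes.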

Once the scenes are tagged, the app switches to tracking mode. Users tap on any object—runner, ball, drone, pet—and the tracker locks on, predicting trajectory across frames using optical-flow and Kalman-filter fusion. A slider superimposes the path directly onto the video, turning a tennis serve into a neon ribbon that reveals racquet-head speed and ball spin. Swipe up and the ribbon converts into a quantified dashboard: peak velocity, launch angle, acceleration curve, all synced to the millisecond. Coaches export the graphic as an MP4 overlay or a CSV for deeper spreadsheet work.
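The Kalman-filter half of that tracker can be illustrated with a small sketch. This is a one-dimensional constant-velocity filter in plain Python, assuming one position measurement per frame (for example, the x-coordinate an optical-flow step might report). The class name, the noise variances, and the initial covariance are all assumptions chosen for illustration; a real tracker would work in two dimensions and tune these values.

```python
class ConstantVelocityKalman1D:
    """Tracks position and velocity along one axis from noisy positions."""

    def __init__(self, dt: float = 1.0, process_var: float = 1e-3, meas_var: float = 1.0):
        self.dt = dt            # time between frames
        self.q = process_var    # assumed process noise (added to the diagonal)
        self.r = meas_var       # assumed measurement noise
        self.pos = 0.0
        self.vel = 0.0
        # Large initial covariance: we start out knowing almost nothing.
        self.P = [[100.0, 0.0], [0.0, 100.0]]

    def predict(self) -> None:
        """Project the state forward one frame: P = F P F^T + Q."""
        dt = self.dt
        (a, b), (c, d) = self.P
        self.pos += dt * self.vel
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
            [c + dt * d, d + self.q],
        ]

    def update(self, z: float) -> None:
        """Correct the prediction with a measured position z."""
        (a, b), (c, d) = self.P
        s = a + self.r              # innovation variance
        k0, k1 = a / s, c / s       # Kalman gain for position and velocity
        y = z - self.pos            # innovation (measurement residual)
        self.pos += k0 * y
        self.vel += k1 * y
        self.P = [
            [(1 - k0) * a, (1 - k0) * b],
            [c - k1 * a, d - k1 * b],
        ]

    def step(self, z: float):
        self.predict()
        self.update(z)
        return self.pos, self.vel


# Toy track: an object moving 2 units per frame.
kf = ConstantVelocityKalman1D()
for frame in range(1, 31):
    kf.step(2.0 * frame)
print(round(kf.vel, 2))  # velocity estimate settles close to 2
```

Because the filter carries a velocity estimate, it can keep predicting the trajectory for a few frames when the object is briefly occluded, which is the usual reason to pair it with optical flow.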

For team sports, multi-object tracking is the killer feature. The app recognizes jersey colors, assigns IDs, and builds mini heat-maps for every player. After a five-a-side scrimmage, the coach sees a bird’s-eye view of the pitch with occupancy gradients, pass networks, and pressing intensity. One button generates a shareable web link; players open it on any browser and scrub through the match without installing anything. The same engine powers security and retail use cases—store owners can quantify shopper flow, dwell time, and conversion hotspots without extra hardware.
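A per-player heat-map boils down to binning tracked positions into a grid. The sketch below shows that step under simple assumptions: positions are already mapped to pitch coordinates in meters, and the pitch size and grid resolution are made-up values. The function name `occupancy_grid` is hypothetical.

```python
from typing import List, Sequence, Tuple


def occupancy_grid(
    positions: Sequence[Tuple[float, float]],
    pitch_w: float,
    pitch_h: float,
    cols: int,
    rows: int,
) -> List[List[float]]:
    """Fraction of tracked frames a player spent in each grid cell."""
    counts = [[0] * cols for _ in range(rows)]
    for x, y in positions:
        # Clamp so points on or slightly past the boundary land in an edge cell.
        col = max(0, min(int(x / pitch_w * cols), cols - 1))
        row = max(0, min(int(y / pitch_h * rows), rows - 1))
        counts[row][col] += 1
    total = len(positions)
    if total == 0:
        return [[0.0] * cols for _ in range(rows)]
    return [[c / total for c in row] for row in counts]


# Assumed 40 m x 20 m five-a-side pitch, split into a 4 x 2 grid.
track = [(1.0, 1.0), (1.0, 2.0), (39.0, 19.0), (20.0, 10.0)]
for row in occupancy_grid(track, pitch_w=40.0, pitch_h=20.0, cols=4, rows=2):
    print(row)
```

Running the same function once per tracked ID yields one grid per player, and each grid can then be rendered as a color gradient over the pitch.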

Slow motion is no longer limited to 240 fps flagships. The app synthesizes intermediate frames with AI frame interpolation, turning 30 fps footage into silky 480 fps replays, the 16x frame-rate increase that 1/16-speed playback at 30 fps requires. A skateboarder can review a trick at 1/16 speed and still scrub frame-by-frame without stutter. Meanwhile, a stabilization network corrects rolling-shutter distortion and camera shake, so even chest-mounted GoPro clips look tripod-steady. Stabilization is non-destructive; toggle back to the original at any time to compare against the raw clip.
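The arithmetic behind those numbers is easy to check: going from 30 fps to 480 fps is a factor of 16, so 15 synthetic frames have to be generated between every pair of real ones. The sketch below works that out and fills the gap with a plain linear cross-fade. That blend is a naive stand-in for learned interpolation, used here only to show where the new frames sit in time; the function names are assumptions.

```python
from typing import List, Sequence


def interpolation_factor(src_fps: int, dst_fps: int) -> int:
    """How many output frames each source frame interval must become."""
    if dst_fps % src_fps != 0:
        raise ValueError("this sketch only handles integer multiples")
    return dst_fps // src_fps


def blend_frames(a: Sequence[float], b: Sequence[float], factor: int) -> List[List[float]]:
    """Return the `factor - 1` in-between frames from a to b.

    Naive linear blend: a learned interpolator would estimate motion
    instead of cross-fading pixel values.
    """
    frames = []
    for k in range(1, factor):
        t = k / factor  # position in time between a (t=0) and b (t=1)
        frames.append([(1 - t) * pa + t * pb for pa, pb in zip(a, b)])
    return frames


factor = interpolation_factor(30, 480)
middles = blend_frames([0.0, 0.0], [16.0, 32.0], factor)
print(factor, len(middles))  # 16 15
```

Cross-fading produces ghosting on fast motion, which is exactly the artifact motion-aware interpolation is meant to avoid.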

Voice-activated tagging keeps hands free on the field. A fitness influencer can say “mark it” every time a rep is completed; the app drops colored keyframes that later become a highlight reel. Natural-language search turns those tags into a personal search engine: type “failed deadlifts” and the timeline jumps straight to every missed lift, complete with bar-path tracing and lumbar-spine angle warnings. For educators, the same engine auto-generates quiz questions: the app freezes a video frame and asks students to predict the next event, turning passive watching into active learning.
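As a rough illustration of how a typed query could map back to timeline positions, here is a small keyword-matching sketch. It is far simpler than real natural-language search: it lowercases words, strips a trailing "s" as a crude plural rule, and requires every query word to appear in a tag's label. The `Tag` structure and the labels are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Tag:
    time_s: float   # position on the timeline, in seconds
    label: str      # free-text label attached to the keyframe


def normalize(text: str) -> Set[str]:
    """Lowercase, split on whitespace, strip trailing 's' (crude plural rule)."""
    return {word.rstrip("s") for word in text.lower().split()}


def search_tags(tags: List[Tag], query: str) -> List[float]:
    """Timestamps of all tags whose label contains every query word."""
    wanted = normalize(query)
    return [t.time_s for t in tags if wanted <= normalize(t.label)]


timeline = [
    Tag(12.5, "deadlift failed"),
    Tag(40.0, "deadlift completed"),
    Tag(71.2, "failed deadlift"),
    Tag(90.0, "squat failed"),
]
print(search_tags(timeline, "failed deadlifts"))  # [12.5, 71.2]
```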

Privacy is engineered, not bolted on. All processing runs on-device by default; cloud mode is opt-in and end-to-end encrypted. Faces are blurred automatically unless the subject consents with a QR-code waiver. Coaches can share tactical clips without exposing athlete identities, and parents can upload youth-game footage without worrying about facial recognition scraping. If the user chooses cloud analytics, data is sharded across multiple jurisdictions and deleted after 30 days unless explicitly archived.
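The 30-day retention rule can be expressed as a small policy check. The sketch below assumes each cloud clip carries an upload timestamp and an `archived` flag, and that "after 30 days" means an age of 30 days or more; the record layout and function names are illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List

RETENTION = timedelta(days=30)  # retention window stated in the policy


@dataclass
class CloudClip:
    clip_id: str
    uploaded_at: datetime
    archived: bool = False  # set when the user explicitly archives the clip


def is_expired(clip: CloudClip, now: datetime) -> bool:
    """True when the clip is past retention and was never archived."""
    return not clip.archived and now - clip.uploaded_at >= RETENTION


def purge(clips: List[CloudClip], now: datetime) -> List[CloudClip]:
    """Return only the clips that should remain in cloud storage."""
    return [c for c in clips if not is_expired(c, now)]


now = datetime(2024, 3, 1, tzinfo=timezone.utc)
clips = [
    CloudClip("a", datetime(2024, 1, 15, tzinfo=timezone.utc)),
    CloudClip("b", datetime(2024, 1, 15, tzinfo=timezone.utc), archived=True),
    CloudClip("c", datetime(2024, 2, 20, tzinfo=timezone.utc)),
]
print([c.clip_id for c in purge(clips, now)])  # ['b', 'c']
```

Using timezone-aware timestamps keeps the comparison well defined when clips are stored across several regions.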

Monetization is usage-based rather than subscription-heavy. Casual users get 50 analysis minutes free every month; power users buy packs of GPU minutes like mobile data. Teams can purchase a white-label SDK that drops the entire engine into their existing app within three API calls. Early adopters include a Bundesliga academy, a U.S. high-school drone-racing league, and an online yoga platform that now offers AI-corrected posture scores for every asana.

Looking ahead, the roadmap includes 3-D skeletal tracking without markers, real-time audio feedback via AirPods (“elbow angle 42 degrees, extend more”), and federated learning that improves the global model every time a local device processes a clip. The long-term vision is ambient video intelligence: any camera, anywhere, becomes a data-collection node that respects privacy and delivers insight before the user even asks.

Until then, the current generation of video analysis apps is already turning weekend warriors into data-driven athletes, TikTok dancers into biomechanics students, and home movies into searchable knowledge bases, all from the phone already in your pocket.